借助

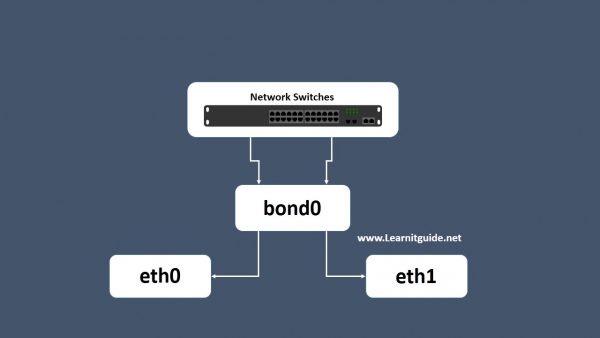

bonding将多个物理网卡创建为单个虚拟逻辑网卡

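1. What is bonding

NIC bonding combines several network interfaces into one logical interface, providing local NIC redundancy, increased bandwidth, and load balancing. The bonding module ships with Linux kernel 2.4.12 and later; earlier kernels can add it with a patch.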

2. Check for and enable the bonding module

First, check whether the kernel supports bonding:

> cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

> uname -r

3.10.0-1127.el7.x86_64

> cat /boot/config-3.10.0-1127.el7.x86_64 | grep -i bonding

CONFIG_BONDING=m
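The same check can be wrapped in a small script. This is a minimal sketch: `bonding_config_status` is a hypothetical helper name, and it assumes the config file uses the usual `CONFIG_BONDING=y`, `CONFIG_BONDING=m`, or `# CONFIG_BONDING is not set` forms.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report how bonding is built, based on a kernel config file.
# Prints "builtin" (=y), "module" (=m), "unsupported", or "missing" (file unreadable).
# On the target host: bonding_config_status "/boot/config-$(uname -r)"
bonding_config_status() {
  local config_file="$1"
  local line
  if [ ! -r "$config_file" ]; then
    echo "missing"
    return 1
  fi
  # Take the first line that sets or explicitly unsets CONFIG_BONDING;
  # '|| true' keeps a no-match from aborting a script that uses set -e
  line=$(grep -m1 -E '^(CONFIG_BONDING=|# CONFIG_BONDING is not set)' "$config_file" || true)
  case "$line" in
    CONFIG_BONDING=y) echo "builtin" ;;
    CONFIG_BONDING=m) echo "module" ;;
    *)                echo "unsupported" ;;
  esac
}
```

On the host in this article, the function would print `module`, matching the `CONFIG_BONDING=m` line above.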

The output `CONFIG_BONDING=m` shows that the kernel supports bonding as a loadable module. View the module information:

> modinfo bonding | less

filename: /lib/modules/3.10.0-1127.el7.x86_64/kernel/drivers/net/bonding/bonding.ko.xz

author: Thomas Davis, tadavis@lbl.gov and many others

description: Ethernet Channel Bonding Driver, v3.7.1

version: 3.7.1

license: GPL

alias: rtnl-link-bond

retpoline: Y

rhelversion: 7.8

srcversion: 02BB340820F6F1A042A3033

depends:

intree: Y

vermagic: 3.10.0-1127.el7.x86_64 SMP mod_unload modversions

......

Load the bonding module and check that it is loaded:

> modprobe bonding

> lsmod | grep bonding

bonding 152979 0
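To make this step idempotent in a provisioning script, one option is to read `/proc/modules` (the data `lsmod` formats into its table) and call `modprobe` only when the module is absent. A minimal sketch: `module_loaded` is a hypothetical helper, and the listing path is a parameter so it can be tested against a sample file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if a module is listed in a
# /proc/modules-style file. The first field of each line is the module name.
module_loaded() {
  local name="$1"
  local modules_file="${2:-/proc/modules}"
  # Exact match on the first field, so "bond" does not match "bonding"
  awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$modules_file"
}

# On the host (as root):
#   module_loaded bonding || modprobe bonding
```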

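3. Create the bond0 interface configuration file

The Dell PowerEdge R730 server has four full-duplex Gigabit Ethernet ports. First, list the current network connections and devices: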

> nmcli c show

NAME UUID TYPE DEVICE

em1 d4d8562f-91e2-4f9f-af48-485d2b0d744f ethernet em1

em2 d82f2490-8fa3-49b9-8e40-275bec92d230 ethernet em2

em3 24c1fdcd-56b2-4d09-ab1b-ab347a73a3ad ethernet em3

em4 31a2eadc-2528-4f4f-907e-35a5801090a2 ethernet --

> nmcli d status

DEVICE TYPE STATE CONNECTION

em1 ethernet connected em1

em2 ethernet connected em2

em3 ethernet connected em3

em4 ethernet unavailable --

bond0 bond unmanaged --

lo loopback unmanaged --

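The bond0 device already exists on the system, because loading the bonding module creates it by default. NetworkManager found no matching configuration file, ifcfg-bond0, in /etc/sysconfig/network-scripts/, so the device is in the unmanaged state.

Create ifcfg-bond0 with the following contents: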
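The same observation can be scripted using NetworkManager's terse output, `nmcli -t -f DEVICE,TYPE,STATE device status`, which prints one colon-separated line per device. A minimal sketch under that assumption: `unmanaged_bonds` and `missing_ifcfg` are hypothetical helpers. The first lists bond devices NetworkManager does not manage. The second reports which of them have no `ifcfg-<device>` file in a given directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "DEVICE:TYPE:STATE" lines on stdin and print
# the bond devices whose state is "unmanaged".
unmanaged_bonds() {
  awk -F: '$2 == "bond" && $3 == "unmanaged" { print $1 }'
}

# Hypothetical helper: read device names on stdin and print those that
# have no ifcfg-<device> file in the given directory.
missing_ifcfg() {
  local dir="$1" dev
  while read -r dev; do
    [ -e "$dir/ifcfg-$dev" ] || echo "$dev"
  done
}

# On the host:
#   nmcli -t -f DEVICE,TYPE,STATE device status \
#     | unmanaged_bonds | missing_ifcfg /etc/sysconfig/network-scripts
```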

> vim /etc/sysconfig/network-scripts/ifcfg-bond0

> cat /etc/sysconfig/network-scripts/ifcfg-bond0

DEVICE=bond0

TYPE=Bond

NAME=bond0

BOOTPROTO=none

ONBOOT=yes

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

IPADDR=xxx.xxx.xxx.xxx

PREFIX=24

GATEWAY=xxx.xxx.xxx.xxx

DNS1=xxx.xxx.xxx.xxx

BONDING_MASTER=yes

BONDING_OPTS="mode=6 miimon=100"
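`mode=6` in `BONDING_OPTS` is the numeric form of a bonding mode. The driver's symbolic name for mode 6 is `balance-alb`, which `/proc/net/bonding/bond0` reports as "adaptive load balancing". The sketch below maps all seven mode numbers to their names. `bond_opts_mode` and `bond_mode_name` are hypothetical helpers written for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the mode= value from a BONDING_OPTS string
# such as "mode=6 miimon=100".
bond_opts_mode() {
  local opt
  # $1 is deliberately unquoted so the options split on whitespace
  for opt in $1; do
    case "$opt" in
      mode=*) echo "${opt#mode=}"; return 0 ;;
    esac
  done
  return 1
}

# Hypothetical helper: translate a numeric bonding mode into the driver's name.
bond_mode_name() {
  case "$1" in
    0) echo "balance-rr" ;;     # round-robin across slaves
    1) echo "active-backup" ;;  # one active slave, the others on standby
    2) echo "balance-xor" ;;    # slave chosen by a transmit hash
    3) echo "broadcast" ;;      # every frame sent on every slave
    4) echo "802.3ad" ;;        # LACP, needs switch support
    5) echo "balance-tlb" ;;    # adaptive transmit load balancing
    6) echo "balance-alb" ;;    # tlb plus receive balancing via ARP negotiation
    *) echo "unknown"; return 1 ;;
  esac
}
```

For example, `bond_mode_name "$(bond_opts_mode 'mode=6 miimon=100')"` prints `balance-alb`.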

Next, create the slave configuration files for em1 and em2:

> cat /etc/sysconfig/network-scripts/ifcfg-bond-slave-em1

TYPE=Ethernet

NAME=bond-slave-em1

DEVICE=em1

ONBOOT=yes

MASTER=bond0

SLAVE=yes

> cat /etc/sysconfig/network-scripts/ifcfg-bond-slave-em2

TYPE=Ethernet

NAME=bond-slave-em2

DEVICE=em2

ONBOOT=yes

MASTER=bond0

SLAVE=yes
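With more ports to enslave (the R730 has four), the slave files can be generated instead of written by hand. A minimal sketch: `write_bond_slaves` is a hypothetical helper that writes the same keys as the two files above. It takes the target directory as an argument, so the output can be reviewed before it goes into `/etc/sysconfig/network-scripts`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write ifcfg-bond-slave-<nic> for each NIC given,
# enslaving it to the named bond master.
# Usage: write_bond_slaves <dir> <master> <nic>...
write_bond_slaves() {
  local dir="$1" master="$2"
  shift 2
  local nic
  for nic in "$@"; do
    cat > "$dir/ifcfg-bond-slave-$nic" <<EOF
TYPE=Ethernet
NAME=bond-slave-$nic
DEVICE=$nic
ONBOOT=yes
MASTER=$master
SLAVE=yes
EOF
  done
}

# Example: write_bond_slaves /etc/sysconfig/network-scripts bond0 em1 em2
```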

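Finally, change ONBOOT=yes to ONBOOT=no in ifcfg-em1 and ifcfg-em2, so the standalone em1 and em2 connections are not brought up at boot alongside the bond: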

> sed -i '/ONBOOT/c ONBOOT=no' /etc/sysconfig/network-scripts/ifcfg-em1

> sed -i '/ONBOOT/c ONBOOT=no' /etc/sysconfig/network-scripts/ifcfg-em2
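`sed '/ONBOOT/c ONBOOT=no'` replaces every line that contains `ONBOOT` anywhere, comments included. It also does nothing when the key is missing. A narrower alternative is sketched below with a hypothetical `disable_onboot` helper. It rewrites only lines that start with `ONBOOT=` and appends the key if it is absent. It assumes GNU sed, as shipped with CentOS 7.

```shell
#!/usr/bin/env bash
# Hypothetical helper: force ONBOOT=no in an ifcfg file.
# Only lines beginning with "ONBOOT=" are rewritten; if none exist, the key is appended.
disable_onboot() {
  local file="$1"
  if grep -q '^ONBOOT=' "$file"; then
    sed -i 's/^ONBOOT=.*/ONBOOT=no/' "$file"   # GNU sed in-place edit
  else
    echo 'ONBOOT=no' >> "$file"
  fi
}

# On the host:
#   for nic in em1 em2; do disable_onboot "/etc/sysconfig/network-scripts/ifcfg-$nic"; done
```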

Restart the network service:

> systemctl restart network

4. Check the bonding status

Check the current bonding status:

> cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: adaptive load balancing

Primary Slave: None

Currently Active Slave: em1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: em1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 18:66:da:f3:b4:28

Slave queue ID: 0

Slave Interface: em2

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 18:66:da:f3:b4:29

Slave queue ID: 0
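To check this status from a script or a monitoring job, the relevant lines can be extracted with awk. A minimal sketch: `bond_summary` is a hypothetical helper. It assumes the `Key: value` layout shown above, where the bond's own `MII Status` line comes before the first `Slave Interface` line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise a /proc/net/bonding/<bond> file as
#   mode <name> / active <slave> / slave <name> <MII status>
bond_summary() {
  local file="${1:-/proc/net/bonding/bond0}"
  awk -F': ' '
    /^Bonding Mode:/           { print "mode " $2 }
    /^Currently Active Slave:/ { print "active " $2 }
    /^Slave Interface:/        { slave = $2 }
    # The first MII Status belongs to the bond itself; only report ones after a slave header
    /^MII Status:/ && slave != "" { print "slave " slave " " $2; slave = "" }
  ' "$file"
}

# On the host: bond_summary /proc/net/bonding/bond0 | grep -v ' up$'
```

With the output shown above, this yields `mode adaptive load balancing`, `active em1`, `slave em1 up`, and `slave em2 up`.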

Use ethtool to check the speed of bond0. It now reports 2000Mb/s, the combined link speed of the two gigabit slaves:

> ethtool bond0

Settings for bond0:

Supported ports: [ ]

Supported link modes: Not reported

Supported pause frame use: No

Supports auto-negotiation: No

Supported FEC modes: Not reported

Advertised link modes: Not reported

Advertised pause frame use: No

Advertised auto-negotiation: No

Advertised FEC modes: Not reported

Speed: 2000Mb/s

Duplex: Full

Port: Other

PHYAD: 0

Transceiver: internal

Auto-negotiation: off

Link detected: yes
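The 2000Mb/s figure is the sum of the slaves' link speeds. The sketch below recomputes that sum from sysfs, reading `/sys/class/net/<bond>/bonding/slaves` for the slave list and `/sys/class/net/<slave>/speed` for each speed. `bond_total_speed` is a hypothetical helper. The sysfs root is a parameter, so the helper can be exercised on a fake directory tree.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add up the link speeds (Mb/s) of a bond's slaves.
# Usage: bond_total_speed <bond> [sysfs-net-root]
bond_total_speed() {
  local bond="$1" root="${2:-/sys/class/net}"
  local total=0 slave speed
  for slave in $(cat "$root/$bond/bonding/slaves" 2>/dev/null); do
    speed=$(cat "$root/$slave/speed" 2>/dev/null || echo 0)
    # A down link may report -1 or fail to read; count it as 0
    [ "$speed" -gt 0 ] 2>/dev/null || speed=0
    total=$((total + speed))
  done
  echo "$total"
}

# On the host: bond_total_speed bond0
```

This is aggregate capacity. In balance-alb mode, traffic to and from a given peer is assigned to a single slave. A single transfer between two hosts is therefore still limited to about 1000Mb/s.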
